Galileo Luna is an evaluation model built to tackle hallucinations in large language models (LLMs). Hallucinations, where a model generates information that is not grounded in the provided context, are a significant obstacle to using language models in industry applications. Luna serves as an Evaluation Foundation Model (EFM) that aims to detect these hallucinations with high accuracy, low latency, and low cost.

Large language models have transformed natural language processing with their ability to generate human-like text. However, hallucinations, where models produce incorrect information, can undermine their reliability in critical applications like customer support and legal advice. Several factors contribute to hallucinations, such as outdated knowledge bases, randomization in responses, faulty training data, and the introduction of new knowledge during fine-tuning.

To address these challenges, retrieval-augmented generation (RAG) systems have been developed to incorporate external knowledge into LLM responses. Despite this, existing hallucination detection techniques often struggle to balance accuracy, latency, and cost for real-time, large-scale industry applications.

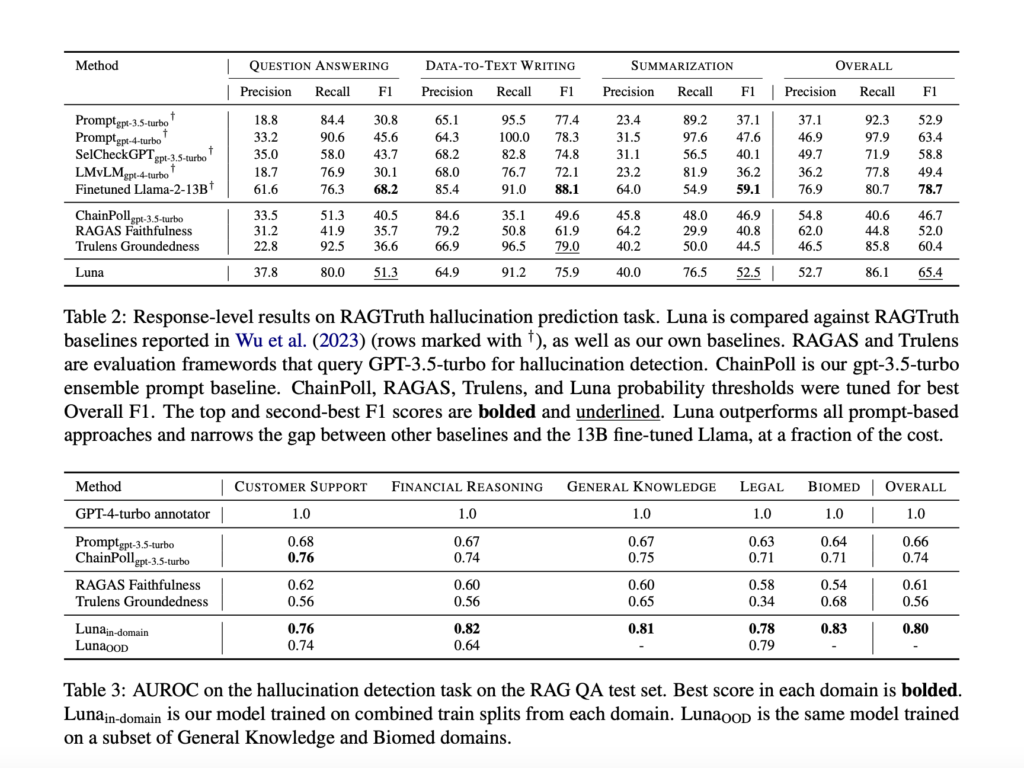

Galileo Technologies has introduced Luna, a DeBERTa-large encoder fine-tuned to detect hallucinations in RAG settings. Luna is reported to outperform models like GPT-3.5 on detection accuracy while costing less and running faster at inference. Built on a 440-million-parameter DeBERTa-large model, it is designed to handle long-context RAG inputs across multiple industry domains, making it versatile for various applications.
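To make the RAG evaluation setting concrete, here is a minimal sketch of the interface such a detector exposes: it takes the retrieved context and the generated response, and returns the response sentences that the context does not support. Everything in it is an assumption for illustration. The function names, the overlapping-window treatment of long contexts, the threshold, and especially the word-overlap scorer are hypothetical stand-ins, not Luna's method; in practice a fine-tuned encoder would take the place of `overlap_score`.

```python
import re
from typing import Callable, List


def split_sentences(text: str) -> List[str]:
    """Naive sentence splitter; good enough for a sketch."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def windows(tokens: List[str], size: int, stride: int) -> List[List[str]]:
    """Split a long context into overlapping token windows (assumed strategy)."""
    if len(tokens) <= size:
        return [tokens]
    out = []
    for start in range(0, len(tokens), stride):
        out.append(tokens[start:start + size])
        if start + size >= len(tokens):
            break
    return out


def overlap_score(context_tokens: List[str], sentence: str) -> float:
    """Toy stand-in scorer: fraction of sentence words found in the window.

    A real detector would replace this with a learned model's support score.
    """
    words = re.findall(r"\w+", sentence.lower())
    if not words:
        return 1.0
    ctx = set(context_tokens)
    return sum(w in ctx for w in words) / len(words)


def flag_unsupported(
    context: str,
    response: str,
    scorer: Callable[[List[str], str], float] = overlap_score,
    threshold: float = 0.6,   # hypothetical cutoff
    size: int = 128,          # hypothetical window length in tokens
    stride: int = 64,         # hypothetical window stride
) -> List[str]:
    """Return response sentences whose best support over all windows is low."""
    ctx_tokens = re.findall(r"\w+", context.lower())
    wins = windows(ctx_tokens, size, stride)
    flagged = []
    for sent in split_sentences(response):
        # A sentence counts as supported if any single window supports it.
        support = max(scorer(w, sent) for w in wins)
        if support < threshold:
            flagged.append(sent)
    return flagged


context = (
    "The refund window is 30 days. "
    "Refunds are issued to the original payment method."
)
response = "The refund window is 30 days. Shipping is free worldwide."
flagged = flag_unsupported(context, response)
print(flagged)
```

Taking the maximum over windows reflects one plausible design choice: a claim only needs to be supported somewhere in the retrieved context, so a long context should not dilute the score.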

Luna targets several evaluation tasks with high accuracy: detecting hallucinations, prompt injections, and PII. It offers ultra-low-cost evaluation, reducing costs significantly compared to other models. Luna also provides ultra-low-latency evaluation, processing tasks in milliseconds for a seamless user experience. Additionally, because the model is pre-trained on evaluation datasets, Luna eliminates the need for ground truth test sets, so evaluations can start immediately.

The model’s performance and cost efficiency have been demonstrated through extensive benchmarking, showcasing a substantial reduction in cost and latency compared to other models. Luna’s ability to process a large number of tokens in milliseconds makes it ideal for real-time applications like customer support. Its customizable nature allows for fine-tuning to meet specific industry needs, enhancing its utility and effectiveness across different domains.

In conclusion, the introduction of Galileo Luna represents a significant milestone in evaluation models for large language systems, ensuring reliability and trustworthiness in AI-driven applications. By addressing the critical issue of hallucinations in LLMs, Luna sets the stage for more robust and dependable language models in diverse industry settings.