Language models are central to modern AI systems, allowing machines to understand and generate human-like text for a wide range of applications. However, building them is challenging because of the large computational and memory resources they require.

One challenge in language model development is balancing the capacity needed for complex tasks against computational efficiency. As demand for more sophisticated models grows, so does the need for powerful computing resources. Transformer-based models have been effective here: they use attention over earlier parts of the text to predict what comes next. However, their memory use grows with sequence length, because they keep a key-value cache entry for every previous token, and this reliance on large resources can limit their practicality.
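
To make that memory cost concrete: a transformer generating text keeps a key-value (KV) cache holding one key vector and one value vector per layer and per head for every previous token, so the cache grows linearly with sequence length. The sketch below computes that size. The function `kv_cache_bytes` and the configuration numbers are hypothetical illustrations, not the dimensions of any particular model.

```python
def kv_cache_bytes(seq_len: int, num_layers: int, num_kv_heads: int,
                   head_dim: int, bytes_per_value: int = 2) -> int:
    """Memory held by a transformer's key-value cache after seq_len tokens.

    Each layer stores one key and one value vector per KV head for every
    token seen so far, hence the factor of 2 and the linear dependence on
    seq_len. bytes_per_value=2 assumes 16-bit floats.
    """
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value


# Hypothetical model configuration, chosen only for illustration.
CONFIG = dict(num_layers=26, num_kv_heads=8, head_dim=256)

for n in (1_000, 8_000, 64_000):
    mib = kv_cache_bytes(n, **CONFIG) / 2**20
    print(f"{n:>6} tokens -> {mib:8.1f} MiB")
```

Because the formula is linear in `seq_len`, doubling the context doubles the cache, which is exactly the cost a fixed-size recurrent state avoids.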

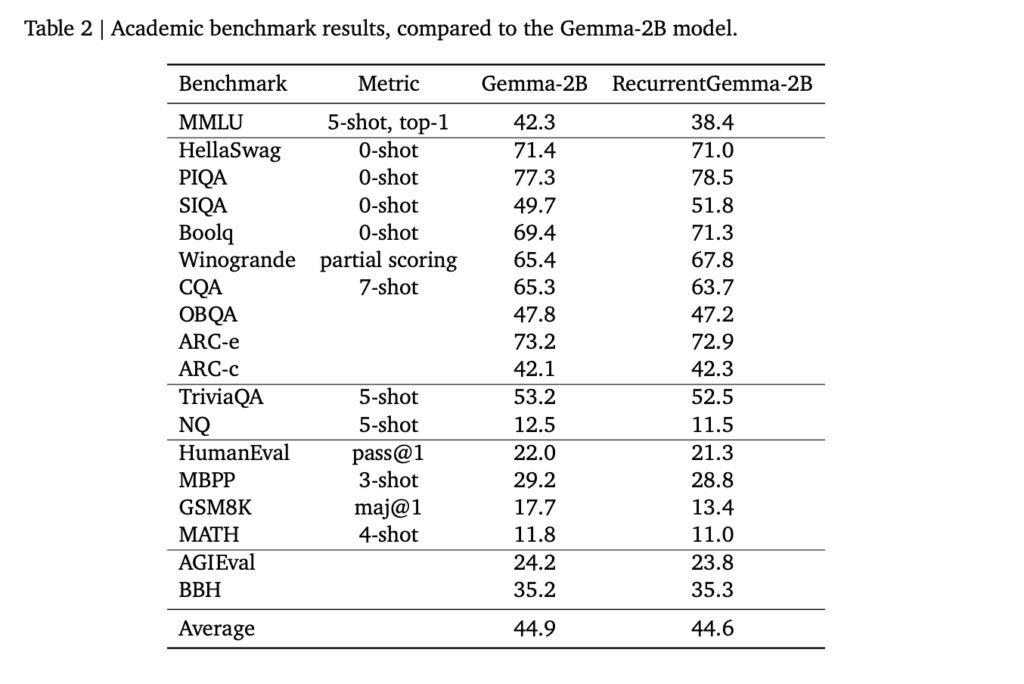

A research team from Google DeepMind has developed RecurrentGemma, a language model built on the Griffin architecture, which combines gated linear recurrences with local attention to reduce memory usage while maintaining performance. Instead of caching every past token, the model compresses the input sequence into a fixed-size state, allowing faster processing without sacrificing accuracy. Compared with its transformer-based predecessors, RecurrentGemma uses less memory and processes sequences faster, making it well suited to tasks involving long text sequences.
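
The idea of compressing a sequence into a fixed-size state can be illustrated with a toy gated linear recurrence, loosely modeled on the Real-Gated Linear Recurrent Unit (RG-LRU) described in the Griffin paper. This is a simplified sketch, not DeepMind's implementation: the class `GatedLinearRecurrence`, its per-channel scalar weights, and its random untrained parameters are all hypothetical. Each token updates the state as h = a·h + sqrt(1 - a²)·(i·x), where a is an input-dependent decay and i is an input gate.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


class GatedLinearRecurrence:
    """Toy per-channel gated linear recurrence (hypothetical, simplified).

    The state has a fixed width `dim` no matter how many tokens are fed in.
    Each channel uses scalar gate weights instead of the learned projection
    matrices a real layer would use.
    """

    def __init__(self, dim: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.dim = dim
        # Hypothetical per-channel parameters (random, untrained).
        self.log_decay = [rng.uniform(-1.0, 1.0) for _ in range(dim)]
        self.w_r = [rng.uniform(-1.0, 1.0) for _ in range(dim)]  # recurrence gate
        self.w_i = [rng.uniform(-1.0, 1.0) for _ in range(dim)]  # input gate
        self.c = 8.0  # constant that sharpens the decay exponent
        self.state = [0.0] * dim

    def step(self, x: list[float]) -> list[float]:
        """Fold one token vector x into the fixed-size state and return it."""
        for k in range(self.dim):
            r = sigmoid(self.w_r[k] * x[k])  # recurrence gate in (0, 1)
            i = sigmoid(self.w_i[k] * x[k])  # input gate in (0, 1)
            a = sigmoid(self.log_decay[k]) ** (self.c * r)  # decay in (0, 1)
            # Decay the old state and mix in the gated input; the
            # sqrt(1 - a^2) factor keeps the update's scale in check.
            self.state[k] = a * self.state[k] + math.sqrt(1.0 - a * a) * (i * x[k])
        return self.state


layer = GatedLinearRecurrence(dim=4)
rng = random.Random(1)
for _ in range(10_000):
    token = [rng.gauss(0.0, 1.0) for _ in range(4)]
    layer.step(token)
print(len(layer.state))  # 4: same size after 10,000 tokens
```

However many tokens are processed, the state stays at `dim` numbers, whereas a transformer's key-value cache gains an entry per token.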

RecurrentGemma achieves performance competitive with similarly sized transformer models while improving inference speed. Because its state does not grow with sequence length, its memory footprint during generation stays bounded, so it can process long sequences faster than traditional transformers. Its architecture and efficient memory usage make it suitable for a variety of applications, particularly in resource-constrained settings.
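
Griffin pairs its recurrent layers with local attention, which attends only to a sliding window of recent tokens. A minimal sketch of why that keeps memory bounded: the key-value cache below holds at most `window` entries and discards the oldest as new tokens arrive. The class `LocalAttentionCache` and the 2048-token window are illustrative assumptions.

```python
from collections import deque


class LocalAttentionCache:
    """Key-value cache for a sliding-window (local) attention layer.

    Hypothetical illustration: only the most recent `window` entries are
    kept, so memory is bounded by the window size rather than by the
    total sequence length.
    """

    def __init__(self, window: int) -> None:
        # A deque with maxlen silently drops its oldest entry when full.
        self.keys: deque = deque(maxlen=window)
        self.values: deque = deque(maxlen=window)

    def append(self, key, value) -> None:
        self.keys.append(key)
        self.values.append(value)

    def __len__(self) -> int:
        return len(self.keys)


cache = LocalAttentionCache(window=2048)  # hypothetical window size
for t in range(100_000):
    cache.append(("k", t), ("v", t))

print(len(cache))     # 2048
print(cache.keys[0])  # ('k', 97952): oldest token still in the window
```

After 100,000 tokens the cache still holds only 2,048 entries; a full-attention cache would hold all 100,000.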

Overall, RecurrentGemma marks an important advance in language model development, demonstrating that models can be both efficient and high-performing without extensive resource demands. It holds promise for future applications that need to process long text sequences quickly and accurately.
